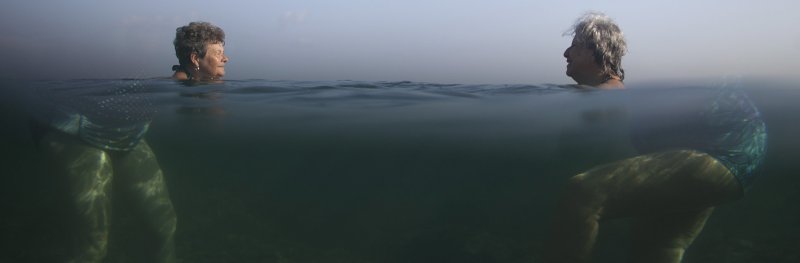

Now you see it

The Book of Days (1864) by the Scottish author Robert Chambers reports a curious legal case: in 1457 in the town of Lavegny, a sow and her piglets were charged and tried for the murder of a small child, whose body had been partially eaten. After much deliberation, the court condemned the sow to death for her part in the act, but acquitted the naive piglets, who were too young to appreciate the gravity of their crimes.

Subjecting a pig to a criminal trial seems perverse through modern eyes, since many of us believe that humans possess an awareness of actions and outcomes that separates us from other animals. While a grazing pig might not know what it is chewing, human beings are surely abreast of their actions and alert to their unfolding consequences. However, while our identities and our societies are built on this assumption of insight, psychology and neuroscience are beginning to reveal how difficult it is for our brains to monitor even our simplest interactions with the physical and social world. In the face of these obstacles, our brains rely on predictive mechanisms that align our experience with our expectations. While such alignments are often useful, they can cause our experiences to depart from objective reality – reducing the clear-cut insight that supposedly separates us from the Lavegny pigs.

One challenge that our brains face in monitoring our actions is the inherently ambiguous information they receive. We experience the world outside our heads through the veil of our sensory systems: the peripheral organs and nervous tissues that pick up and process different physical signals, such as light that hits the eyes or pressure on the skin. Though these circuits are remarkably complex, the sensory wetware of our brain possesses the weaknesses common to many biological systems: the wiring is not perfect, transmission is leaky, and the system is plagued by noise – much like how the crackle of a poorly tuned radio masks the real transmission.

But noise is not the only obstacle. Even if these circuits transmitted with perfect fidelity, our perceptual experience would still be incomplete. This is because the veil of our sensory apparatus picks up only the ‘shadows’ of objects in the outside world. To illustrate this, think about how our visual system works. When we look out on the world around us, we sample spatial patterns of light that bounce off different objects and land on the flat surface of the eye. This two-dimensional map of the world is preserved throughout the earliest parts of the visual brain, and forms the basis of what we see. But while this process is impressive, it leaves observers with the challenge of reconstructing the real three-dimensional world from the two-dimensional shadow that has been cast on their sensory surface.

Thinking about our own experience, it seems like this challenge isn’t too hard to solve. Most of us see the world in 3D. For example, when you look at your own hand, a particular 2D sensory shadow is cast on your eyes, and your brain successfully constructs a 3D image of a hand-shaped block of skin, flesh and bone. However, reconstructing a 3D object from a 2D shadow is what engineers call an ‘ill-posed problem’ – basically impossible to solve from the sampled data alone. This is because infinitely many different objects all cast the same shadow as the real hand. How does your brain pick out the right interpretation from all the possible contenders?
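Why the 3D-from-2D problem is ill-posed can be shown in a few lines. The sketch below is a deliberately simplified assumption: it treats the eye as an orthographic projection that simply throws away each point's depth (real eyes use perspective projection), and `shadow`, `flat_hand` and `warped_object` are hypothetical names for illustration. Any choice of depths yields the same 2D data.

```python
import random

# A simplified "eye": orthographic projection that discards depth (z).
# Real eyes use perspective projection; this is an illustrative assumption.
Point3D = tuple[float, float, float]
Point2D = tuple[float, float]

def shadow(points: list[Point3D]) -> list[Point2D]:
    """Cast a 2D 'shadow' of a 3D object by dropping each point's depth."""
    return [(x, y) for x, y, _z in points]

# Three landmarks on a flat, hand-like outline.
flat_hand = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 2.0, 0.0)]
# Same x and y, arbitrary depths: a physically different object.
warped_object = [(0.0, 0.0, 5.0), (1.0, 0.0, -3.0), (1.0, 2.0, 0.7)]

# The sensory data cannot tell them apart.
print(shadow(flat_hand) == shadow(warped_object))  # True

# Nor can they separate any of 1,000 randomly "bent" versions of the hand.
random_objects = [
    [(x, y, random.uniform(-10.0, 10.0)) for x, y, _z in flat_hand]
    for _ in range(1000)
]
print(all(shadow(obj) == shadow(flat_hand) for obj in random_objects))  # True
```

Because the depth of every point can be chosen freely, there is no upper limit on the number of objects consistent with one shadow. The data alone cannot settle which one is really there.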

Perception is difficult because two different objects can cast the same ‘shadow’ on your sensory system. Your brain could solve this problem by relying on what it already knows about the size and shape of things like hands.

The second challenge we face in effectively monitoring our actions is the problem of pace. Our sensory systems have to depict a rapid and continuous flow of incoming information. Rapidly perceiving these dynamic changes is important even for the simplest of movements: we will likely end up wearing our morning coffee if we can’t precisely anticipate when the cup will reach our lips. But, once again, the imperfect biological machinery we use to detect and transmit sensory signals makes it very difficult for our brains to quickly generate an accurate picture of what we’re doing. And time is not cheap: while it takes only a fraction of a second for signals to get from the eye to the brain, and fractions more to use this information to guide an ongoing action, these fractions can be the difference between a dry shirt and a wet one.

Psychologists and neuroscientists have long wondered what strategies our brains might use to overcome the problems of ambiguity and pace. There is a growing appreciation that both challenges could be overcome using prediction. The key idea here is that observers do not simply rely on the current input coming in to their sensory systems, but combine it with ‘top-down’ expectations about what the world contains.

This idea is not completely new. In the 19th century, the German polymath Hermann von Helmholtz proposed that the ill-posed problem of generating reliable percepts from ambiguous signals could be solved by a process of ‘unconscious inference’, where observers use tacit knowledge of how the world is structured to come up with accurate visual images. Over the decades, this idea filtered into cognitive psychology, especially through the ‘perceptions as hypotheses’ concept put forth by the British psychologist Richard Gregory in the 1970s. Gregory likened the processes of sensory perception to the scientific method: in the same way that scientists will interpret evidence through the lens of their current theory, our perceptual systems can contextualise the ambiguous evidence they receive from the senses based on their own models of the environment.

More recent incarnations of these ideas suppose the brain is ‘Bayesian’, basing its perceptions on expectation. In 1763, an essay by the English statistician and Presbyterian minister Thomas Bayes was published posthumously, setting out a theorem that describes how one can make rational inferences by combining observation with prior knowledge. For example, if you hear the pitter-patter of water droplets on a scorching summer’s day, it is more likely that you left your sprinkler on than that it has started to rain. Proponents of the ‘Bayesian brain’ hypothesis suggest that this kind of probabilistic inference occurs when ‘bottom-up’ sensory signals are evaluated in light of ‘top-down’ knowledge about what is and isn’t likely.
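The sprinkler example can be worked through with Bayes' theorem directly. The sketch below compares three mutually exclusive explanations for the sound; all of the probabilities are invented for illustration and are not measurements. The `posterior` helper is a hypothetical name.

```python
def posterior(priors: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """Bayes' theorem over a set of mutually exclusive hypotheses."""
    # Unnormalised posterior: prior x likelihood for each hypothesis.
    joint = {h: priors[h] * likelihoods[h] for h in priors}
    # Evidence P(sound): sum over all hypotheses, used to normalise.
    evidence = sum(joint.values())
    return {h: p / evidence for h, p in joint.items()}

# Illustrative numbers only (assumptions, not data).
# Top-down knowledge: rain is rare on a scorching summer's day.
priors = {"rain": 0.02, "sprinkler": 0.30, "neither": 0.68}
# Bottom-up evidence: how likely is the pitter-patter under each hypothesis?
likelihoods = {"rain": 0.9, "sprinkler": 0.8, "neither": 0.01}

post = posterior(priors, likelihoods)
for hypothesis, p in post.items():
    print(f"{hypothesis}: {p:.3f}")
```

With these numbers, rain actually explains the sound slightly better (0.9 versus 0.8), yet the sprinkler takes about 0.91 of the posterior and rain only about 0.07. The prior knowledge about summer weather does most of the work.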

As it turns out, patterns of connectivity seen in the brain’s cortex – with massive numbers of backward connections from ‘higher’ to ‘lower’ areas – support these ideas. The concept informs an influential model of brain function known as hierarchical predictive coding, devised by the neuroscientist Karl Friston at University College London and his colleagues. This theory suggests that in any given brain region – for example, the early visual cortex – one population of neurons encodes the sensory evidence coming in from the outside world, and another set represents current ‘beliefs’ about what the world contains. Under this theory, perception unfolds as incoming evidence adjusts our ‘beliefs’, with ‘beliefs’ themselves determining what we experience. Crucially, however, the large-scale connectivity between regions makes it possible to use prior knowledge to privilege some ‘beliefs’ over others. This allows observers to use top-down knowledge to turn up the volume on the signals they expect, giving them more weight as perception unfolds.
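The interplay between an evidence population and a ‘belief’ population can be caricatured in a few lines. The sketch below is a toy under stated assumptions, not Friston's model. It has one level, the belief predicts the input directly, and the precisions (inverse variances) and step size are fixed. The belief settles by following two prediction errors, each scaled by its precision. Raising `pi_prior` plays the role of turning up the volume on what is expected. The function and parameter names are hypothetical.

```python
def infer_belief(x: float, prior_mean: float, pi_sensory: float, pi_prior: float,
                 lr: float = 0.05, steps: int = 500) -> float:
    """Settle a single 'belief' unit by descending precision-weighted prediction errors.

    Toy assumptions: one level, identity generative mapping (the belief predicts
    the input directly), fixed precisions and a fixed step size. The loop is only
    stable while lr * (pi_sensory + pi_prior) < 2.
    """
    mu = prior_mean  # the belief starts at the top-down expectation
    for _ in range(steps):
        sensory_error = x - mu          # bottom-up: input not yet explained by the belief
        prior_error = mu - prior_mean   # top-down: belief's departure from expectation
        # Each error nudges the belief, weighted by how much it is trusted (its precision).
        mu += lr * (pi_sensory * sensory_error - pi_prior * prior_error)
    return mu

# Input of 1.0 against an expectation of 0.0.
print(round(infer_belief(1.0, 0.0, pi_sensory=1.0, pi_prior=1.0), 3))  # 0.5
print(round(infer_belief(1.0, 0.0, pi_sensory=1.0, pi_prior=3.0), 3))  # 0.25
```

The belief converges to the precision-weighted average `(pi_sensory * x + pi_prior * prior_mean) / (pi_sensory + pi_prior)`. Giving the expectation more weight pulls the settled belief, and so the percept, towards what was predicted.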

Allowing top-down predictions to percolate into perception helps us to overcome the problem of pace. By pre-activating parts of our sensory brain, we effectively give our perceptual systems a ‘head start’. Indeed, a recent study by the neuroscientists Peter Kok, Pim Mostert and Floris de Lange found that, when we expect an event to occur, templates of it emerge in visual brain activity before the real thing is shown. This head start can provide a route to fast and effective behaviour.

Sculpting perception towards what we expect also allows us to overcome the problem of ambiguity. As Helmholtz supposed, we can generate reliable percepts from ambiguous data if we are biased towards the most probable interpretations. For example, when we look at our hands, our brain can come to adopt the ‘correct hypothesis’ – that these are indeed hand-shaped objects rather than one of the infinitely many other possibilities – because it has very strong expectations about the kinds of objects that it will encounter.
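Here is a minimal sketch of how a prior can break a tie that the senses cannot. It assumes three candidate interpretations that cast exactly the same shadow, so the sensory likelihoods are identical. The prior values are hypothetical stand-ins for a lifetime of seeing hands, and `most_probable` is an illustrative name. The choice rule picks the maximum a posteriori interpretation.

```python
def most_probable(priors: dict[str, float], likelihoods: dict[str, float]) -> str:
    """Return the interpretation with the highest prior x likelihood (the MAP choice)."""
    return max(priors, key=lambda h: priors[h] * likelihoods[h])

# Every candidate casts exactly the same 2D shadow, so the sensory data
# assign them identical likelihoods and cannot break the tie on their own.
same_shadow = {"hand": 1.0, "flat hand-shaped cut-out": 1.0, "warped sculpture": 1.0}
# Hypothetical priors reflecting a lifetime of seeing hands.
priors = {"hand": 0.97, "flat hand-shaped cut-out": 0.02, "warped sculpture": 0.01}

print(most_probable(priors, same_shadow))  # hand
```

Because the likelihoods are equal, the answer is decided entirely by the expectations. A different set of priors would yield a different percept from the very same sensory data.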

When it comes to our own actions, these expectations come from experience. Across our lifetimes, we acquire vast amounts of experience by performing different actions and experiencing different results. This likely begins early in life with the ‘motor babbling’ seen in infants. The apparently random leg kicks, arm waves and head turns performed by young children give them the opportunity to send out different movement commands and to observe the different consequences. This experience of ‘doing and seeing’ forges predictive links between motor and sensory representations, between acting and perceiving.

One reason to suspect that these links are forged by learning comes from evidence showing their remarkable flexibility, even in adulthood. Studies led by the experimental psychologist Celia Heyes and her team while they were based at University College London have shown that even short periods of learning can rewire the links between action and perception, sometimes in ways that conflict with the natural anatomy of the human body.

Brain scanning experiments illustrate this well. If we see someone else moving their hand or foot, the parts of the brain that control that part of our own body become active. However, an intriguing experiment led by the psychologist Caroline Catmur at University College London found that giving experimental subjects reversed experiences – seeing tapping feet when they tapped their hands, and vice versa – could reverse these mappings. After this kind of experience, when subjects saw tapping feet, motor areas associated with their hands became active. Such findings, and others like them, provide compelling evidence that these links are learned by tracking probabilities. This kind of probabilistic knowledge could shape perception, allowing us to activate templates of expected action outcomes in sensory areas of the brain – in turn helping us to overcome sensory ambiguities and rapidly furnish the ‘right’ perceptual interpretation.
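One simple way to picture ‘learning links by tracking probabilities’ is a delta-rule learner. The sketch below is an assumption for illustration, not the model used in these studies. Each weight drifts towards how often an outcome follows an action, and with a constant learning rate recent experience counts most. That is why a short block of reversed training can overturn a long history. The sketch covers only the action-to-outcome direction, and all names and numbers are hypothetical.

```python
ACTIONS = ("tap_hand", "tap_foot")
OUTCOMES = ("see_hand", "see_foot")

def train(weights, episodes, alpha=0.1):
    """Delta-rule update: each weight drifts toward how often an outcome follows an action.

    With a constant learning rate alpha, weights[a][o] becomes a recency-weighted
    estimate of P(outcome o | action a), so recent experience counts most.
    """
    for action, seen in episodes:
        for outcome in OUTCOMES:
            target = 1.0 if outcome == seen else 0.0
            weights[action][outcome] += alpha * (target - weights[action][outcome])
    return weights

def predicted_outcome(weights, action):
    """The outcome the learner most strongly expects after an action."""
    return max(weights[action], key=weights[action].get)

# Start with no preference between outcomes.
weights = {a: {o: 0.5 for o in OUTCOMES} for a in ACTIONS}

# 'Babbling' with the body's natural mapping: moving a hand shows a hand.
train(weights, [("tap_hand", "see_hand"), ("tap_foot", "see_foot")] * 200)
print(predicted_outcome(weights, "tap_hand"))  # see_hand

# A short block of reversed training: tap hands while seeing feet, and vice versa.
train(weights, [("tap_hand", "see_foot"), ("tap_foot", "see_hand")] * 30)
print(predicted_outcome(weights, "tap_hand"))  # see_foot
```

After 30 reversed pairings, the weight linking a hand tap to seeing a hand has fallen to roughly 0.04. A plain running count would instead need more reversed trials than the whole original history, which does not fit the speed of the rewiring described above.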

In recent years, a group of neuroscientists has posed an alternative view, suggesting that we selectively edit out the expected outcomes of our movements. Proponents of this idea have argued that it is much more important for us to perceive the surprising, unpredictable parts of the world – such as when the coffee cup unexpectedly slips through our fingers. Filtering out expected signals will mean that sensory systems contain only surprising ‘errors’, allowing the limited bandwidth of our sensory circuits to transmit only the most relevant information.
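The cancellation idea amounts to subtracting the prediction from the input and passing on only the remainder. The sketch below assumes a hypothetical four-channel sensory code (three fingers plus a ‘cup slipping’ channel) with made-up activity values.

```python
def cancel(sensory: list[float], predicted: list[float]) -> list[float]:
    """Cancellation: subtract the predicted signal so only the residual 'error' remains."""
    return [s - p for s, p in zip(sensory, predicted)]

# Hypothetical channels: [finger 1, finger 2, finger 3, cup slipping]
predicted = [0.0, 1.0, 0.0, 0.0]        # we expect to see finger 2 move
as_expected = [0.0, 1.0, 0.0, 0.0]      # the movement unfolds as planned
cup_slips = [0.0, 1.0, 0.0, 0.8]        # ...plus an unexpected slip

print(cancel(as_expected, predicted))   # [0.0, 0.0, 0.0, 0.0]
print(cancel(cup_slips, predicted))     # [0.0, 0.0, 0.0, 0.8]
```

When everything goes to plan, nothing is transmitted at all. Only the surprising slip gets through, which is the bandwidth saving this hypothesis emphasises.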

A cornerstone of this ‘cancellation’ hypothesis has been studies showing that there is less activity in the sensory brain when we experience predictable action outcomes. If we feel touch on our skin or see a hand move, different regions of the somatosensory or visual brain become more active. However, early studies found that when we touch our own skin – by brushing our hands or touching our palms – activity in these brain areas is relatively reduced, compared with when these sensations come from an external source. A similar suppression of activity is found in the visual brain when we observe hands performing movements that match our own.

My colleagues and I investigated how these prediction mechanisms work. In a recent study I conducted with Clare Press at Birkbeck, University of London – alongside the cognitive neuroscientists Sam Gilbert and Floris de Lange – we placed volunteers in an MRI scanner and recorded their brain activity while they performed a simple task. We asked them to move their fingers and observe an avatar hand move onscreen. Whenever they performed an action, the hand on the screen either made a synchronous movement that could be expected (moving the same finger) or unexpected (moving a different finger). By looking at patterns of brain activity in these two scenarios, we were able to tease apart how expectations change perceptual processing.

In short, our analysis revealed that there was more information about the outcomes that participants had seen when they were consistent with the actions they were performing. A closer look found that these ‘sharper signals’ in visual brain areas were accompanied by some suppressed activity – but only in parts sensitive to unexpected events. In other words, predictions generated during action seemed to edit out unexpected signals, generating sharper representations in the sensory brain that are more strongly weighted towards what we expect.
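The difference between ‘sharpening’ and cancellation can be made concrete with the same kind of toy channel code. In the sketch below, sharpening is assumed to scale down every channel that was not predicted by a hypothetical factor of 0.5 and to leave predicted channels untouched. Cancellation is included for comparison. The channel layout, noise level and suppression factor are all illustrative assumptions.

```python
def sharpen(sensory: list[float], predicted: list[float], suppression: float = 0.5) -> list[float]:
    """Sharpening: dampen channels that were not predicted; leave predicted ones intact."""
    return [s if p > 0 else s * suppression for s, p in zip(sensory, predicted)]

def cancel(sensory: list[float], predicted: list[float]) -> list[float]:
    """Cancellation, for comparison: remove the predicted component."""
    return [s - p for s, p in zip(sensory, predicted)]

def share_on_expected(activity: list[float], expected_index: int) -> float:
    """Fraction of total activity carried by the expected channel."""
    return activity[expected_index] / sum(activity)

predicted = [0.0, 1.0, 0.0, 0.0]        # expect finger 2 to move
noisy_input = [0.4, 1.0, 0.4, 0.4]      # finger 2 moves, plus sensory noise elsewhere

sharpened = sharpen(noisy_input, predicted)
print(sharpened)                                     # [0.2, 1.0, 0.2, 0.2]
print(round(share_on_expected(noisy_input, 1), 3))   # 0.455
print(round(share_on_expected(sharpened, 1), 3))     # 0.625
print(cancel(noisy_input, predicted))                # [0.4, 0.0, 0.4, 0.4]
```

Both operations lower total activity, so each is compatible with the reduced responses reported for predictable outcomes. They disagree about what survives, though. Sharpening keeps the expected outcome and raises its share of the signal, while cancellation removes it and leaves only the noise.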

These findings suggest that our expectations sculpt neural activity, causing our brains to represent the outcomes of our actions as we expect them to unfold. This is consistent with a growing psychological literature suggesting that our experience of our actions is biased towards what we expect.

One compelling demonstration came from a 2007 study led by the psychologist Kazushi Maruya in Japan using a technique called binocular rivalry. In binocular rivalry experiments, an observer is placed in an apparatus that presents different images to the left and the right eyes. When these images conflict, the observer’s perceptual experience is typically dominated by one of them, with occasional fluctuations between the competing alternatives. Maruya and colleagues created the experience of visual competition by presenting observers with a flickering black-and-white pattern to the left eye, and a moving sphere to the right. Intriguingly, the researchers found that when they yoked the moving sphere to actions that the observers were performing, this image was more likely to ‘win out’ in the competition. Conscious experience here was dominated by the predictable action outcome.

Experiments such as Maruya’s join a host of others suggesting that our perceptual experience is biased by the actions we perform. For example, key presses performed by pianists can bias whether they hear a sequence of musical notes as ascending or descending in pitch. What’s more, how we experience the passage of time can be manipulated by our actions – when we move more slowly, other events seem to last longer – and we tend to see ambiguous motion move in directions consistent with our actions. Since our expectations will typically come true, sculpting perception in line with our beliefs can lead to a more robust picture of how we influence the environment around us.

One of the most fascinating possibilities is that our predictive machinery might play an important role in helping us interact with the social world. After all, human beings appear to obey a set of rules in the way that they greet each other, take turns in conversation, and respond to each other’s behaviour. One of the most ubiquitous, structured ways that people respond to each other is through imitation, defined by cognitive scientists as an instance in which an observer copies the bodily movements of a model: the gestures used, the gait of the walk and so on. If you see your interlocutor rub her face or shake her foot, you are likely to do the same.

A range of studies have suggested that the experience of being imitated can have important consequences for the quality of our interactions. Having our actions copied can increase the trust, rapport and affiliation we feel towards those who do the copying. One evocative example – a 2003 study led by the psychologist Rick van Baaren at Radboud University of Nijmegen in the Netherlands – found that waiters who were instructed to imitate diners received bigger tips than those who weren’t.

But while the experience of being imitated can act as a powerful social lubricant, we can perceive how others behave towards us – like everything else – only through the imperfect veil of our sensory systems. The prosocial potential of being imitated will be entirely frustrated if our brains can’t register that these reactions occurred. However, this problem could be overcome if we generate predictions about the social consequences of our actions in the same way that we furnish expectations about the physical outcomes of our movements, using our predictive machinery to make other people easier to perceive.

Press and I explored this idea in a 2018 study investigating whether predictive mechanisms deployed during action could influence how we perceive imitation in others. We did indeed find a signature of prediction: the perception of expected action outcomes looked more visually intense. This signature persisted for seconds after our own movements, suggesting our predictive mechanisms are well-suited to anticipating the imitative reactions of other people.

This kind of enhancement could be especially important in demanding sensory environments: for example, making it easier to spot the friend who is waving back to us in a crowded room. Another relatively underexplored possibility is that such predictions could cause us to perceive other people as being more similar to ourselves. If moving slowly makes the rest of the world seem slower too, the slow, sluggish movements we make when we are feeling sad could bias us to perceive others as also being slower and more downbeat.

Of course, using prediction to construct our experience can be a double-edged sword. The second edge becomes clear when you realise that our expectations will sometimes not come true. If you produce a forceful lift to move an empty teapot you thought was full, the hollow vessel will accelerate much faster than you expected. By the same token, if you tell a misjudged joke, you might be met with a sea of bemused faces rather than the laughs you were hoping for.

It seems that in these scenarios it would be maladaptive for us to reshape our perceptual experiences to make these events appear more like our expectations: to misperceive the teapot as moving slower than it really is, or to edit the expressions of our social partners so that they seem more amused, simply because these were what we predicted a priori. Such misperceptions are likelier to occur when the sensory world provides poorer information and expectations might be given more weight. These occasional misperceptions could be the price we pay for a process that generates reliable experiences more often than not.
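Why poorer sensory information lets expectations take over can be shown with the standard result for combining two Gaussians. Under that assumption, the percept is a precision-weighted average of what was observed and what was expected. The teapot speeds and noise levels below are hypothetical units chosen for illustration.

```python
def perceived(observed: float, expected: float, sd_sensory: float, sd_prior: float) -> float:
    """Posterior mean of two Gaussians: a precision-weighted average of data and expectation."""
    pi_sensory = 1.0 / sd_sensory ** 2   # precision = 1 / variance
    pi_prior = 1.0 / sd_prior ** 2
    return (pi_sensory * observed + pi_prior * expected) / (pi_sensory + pi_prior)

# Hypothetical units: the lift was planned for a full pot (expected speed 1.0),
# but the empty pot actually rises at speed 3.0.
clear_view = perceived(3.0, 1.0, sd_sensory=0.5, sd_prior=1.0)
poor_view = perceived(3.0, 1.0, sd_sensory=2.0, sd_prior=1.0)
print(round(clear_view, 2))  # 2.6
print(round(poor_view, 2))   # 1.4
```

With a clear view, the percept stays close to the true speed. When the sensory signal is four times noisier, the same computation reports the pot moving at under half its real speed, closer to what was predicted than to what happened.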

Nonetheless, it is easy to see how such misperceptions could undermine us. Many of our social, cultural and legal institutions depend on the idea that human beings generally know what they are doing, and therefore can be held responsible for what they have done. Though it would caricature the science to suggest that human beings misperceive their actions most of the time, there might be cases where misperceptions induced by what we expect could have important consequences. For example, a well-practised physician might have very strong expectations about how a patient will respond to a simple procedure (eg, a lumbar puncture) that could bias them to misperceive unusual reactions when they do occur. Does the moral or legal status of the doctor change if she is biased to misperceive her patient’s symptoms? Does this issue become more complicated if we consider that any misperceptions could be a result of the doctor’s expertise?

While prediction has its dark side, consider how difficult the world would be without it. This thought has long occupied psychiatrists, who have suggested that some of the unusual experiences seen in mental illness might reflect disruptions in the ability to predict.

A particularly curious set of cases are ‘delusions of control’ seen in some patients with schizophrenia. Patients suffering from such a delusion report a distressing experience where they feel as though their actions are driven by an external alien force. One such patient described this anomalous experience to the British psychiatrist C S Mellor thus: ‘It is my hand and arm which move, and my fingers pick up the pen, but I don’t control them. What they do is nothing to do with me.’

In line with such vivid case reports, experimental studies have revealed that schizophrenic patients can have difficulties recognising their actions. For example, in a 2001 experiment led by the psychiatrist Nicolas Franck at Lyon University Hospital in France, schizophrenic patients and healthy control volunteers were shown video feedback of their actions that could be altered in various ways – such as spatially distorting the footage or adding temporal delays. The researchers found that the patients were poorer at detecting these mismatches, suggesting that they had a relatively impoverished perception of their own actions.

This deficit in action monitoring and concomitant delusions could arise because these patients experience a breakdown in the mechanisms that allow them to predict the consequences of their movements. Such a breakdown could change how action outcomes are experienced, which in turn predisposes the patients to develop bizarre beliefs. In particular, losing the ‘sharpening’ benefits of top-down prediction might leave people with relatively more ambiguous experiences of their actions, making it hard to determine what has or has not happened as a result of their behaviour. While this ambiguity might be acutely distressing in its own right, being afflicted by such unusual experiences for a prolonged time could contribute to an individual’s ‘delusional mood’ – the sense that strange things are happening that require a strange, and perhaps delusional, explanation.

In the end, it seems that there is an emerging view from psychology and neuroscience that our expectations play a key role in shaping how we experience our actions and their outcomes. While integrating predictions into what we perceive could be a powerful way to monitor our actions in an inherently ambiguous sensory world, this process can cause us to misrepresent the consequences of our behaviour when our expectations don’t come true. Such fictitious experiences can undermine the idea that we have a crystal-clear insight into our own behaviour that separates us from the naive Lavegny pigs. When it comes to our actions, we might see what we believe, and on occasion, we too might know not what we do.

But there is a large distinction between 'how' and 'why'. The article presents research on the control functions of the brain that shows how the brain and autonomous nervous system perform control functions. Control engineers can mathematically describe these control functions.

But that does not inductively translate into describing why people engage in directed activities. The seeded article falls into the trap of inductive irrationality with the statement:

"Many of our social, cultural and legal institutions depend on the idea that human beings generally know what they are doing, and therefore can be held responsible for what they have done."

Our social, cultural, and legal institutions consider both the 'how' and the 'why'. The legal system, in particular, must show evidence of means plus intent. So, the research presented in the seed is only telling half the story. And the half that is presented doesn't apply to the other half.